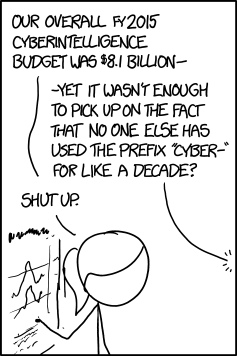

Ovenstående XKCD-stripe spejler mange internetmenneskers hovedrysten over statens og det sikkerhedsindustrielle kompleks' brug af præfikset cyber- om alting på internettet. De er ude af touch. Det er gammeldags. Sådan tænker vel de fleste - men jeg tror man går galt i byen, hvis det er det man tror det handler om.

Cyber- har vi fra cyberspace. For de fleste, er det et ord, der kom fra William Gibson og hans - ja, undskyld - cyberspacetrilogi, med gennembruddet Neuromancer som den vigtigste roman.

Men præfikset er ældre. Kilden til ordet er Norbert Wieners kunstord cybernetics - om den nye videnskab om feedback og kontrol han opfandt under og lige efter 2. verdenskrig. Order kommer fra græsk - kybernetes, som betyder styrmand - eller allerede hos de gamle grækere også metaforisk: Den der styrer. En leder.

Kontrol

Wieners kybernetik - og ordet cyber - handler altså meget præcist om én ting: Kontrol. Vores begreb om information - også affødt af 'the cybernetic turn' efter krigen er på sin vis en biomstændighed ved udviklingen af kontrol.

Kybernetikkens og informationens allerførste usecase var våbenstyringssystemer. Automatiske sigteapparater til hærens og flådens kanoner. Informationen skulle bruges til at etablere kontrol. Informationen var aldrig pointen, kontrollen var.

Og ordet cybers rod har aldrig forladt internettet, og har på intet tidspunkt været væk. At NSA og hele det statslige sikkerhedsapparat betitler alt internet med cyber, handler om at missionen ikke på noget tidspunkt har været væk - ikke om at de sidder fast tilbage i 80erne.

Selv ikke i Gibsons romaner er kontrol og magt på noget tidspunkt væk. Gibsons hovedpersoner er altid i kløerne på - eller agenter for - tågede stærkere magter; sådan lidt ligesom i klassisk græsk drama, hvor Odysseus og alle de andres historier i sidste ende er givet og berammet af guderne. Det er simpelthen urformen på Gibsons plots, fra Neuromancer over Pattern Recognitions til The Peripheral. Uafhængighed er en dyrt vundet luksus for de allerrigeste - og selv den har grænser.

Så grin bare af cyber- det ene og cyber- det andet. The joke's on you.

(Hvis man vil læse mere om informationens historie og computerens og nettets ditto så kan jeg kun varmt anbefale James Gleicks 'The Information' - eller man kan læse George Dysons 'Turings Cathedral', som også er god. Læser man begge vil man opleve en vis deja vu, men de er begge gode og forskellige nok til at være besværet værd)

Om en god times tid relauncher jeg Apollo 11 i din browser, for at fejre det største ingenørprojekt nogensinde foretaget, med atombomben som den eneste mulige undtagelse.

Ideen med projektet er, i disse 140-tegns tider, at give dig en chance for at opleve projektets enorme omfang, f.eks. bare missionens lange varighed. Det tager et par dage at flyve til månen, f.eks.

Når man selv arbejder med at lave nye ting, bare på en meget, meget mindre skala, må man tage hatten af for Apollo-projektets enorme omfang og ambitionsniveau. Da det kostede mest udgjorde Apollo-projektet hovedparten af NASAs budget, der var over 4% af det amerikanske statsbudget. Alt i alt brugte NASA omkring $100mia i nutidsdollars på at komme til månen.

Og de blev vel at mærke brugt meget hurtigt. Fra 1961 og frem mod månelandingen foretog NASA omkring 25 testflyvninger med forskellige delsystemer af måneraketten. Helt frem til april '68 fandt man fejl under tests, der havde slået astronauterne ihjel, hvis ikke man altså havde det omfattende pre-flight testprogram.

Den for mig mest glemte viden om Apollo-programmet, da jeg sidst genopførte månerejsen, var de to rejser ud til månen før Apollo 11. Apollo 8 havde bevist at man overhovedet kunne flyve sikkert ud til månen og hjem igen. Apollo 10 var en decideret generalprøve. Ud til månen, ombord i landingsmodulet, gennemføre en flyvning højt over månen i landingsmodulet - og så retur uden at sætte landeren ned.

Det enorme budget, og de enorme ambitioner er en ting, men det helt store lærestykke for mig i projektet er, hvor tæt på månerejsen, der stadig var store uløste udfordringer, og så hvor forsigtigt, metodisk og kostbart man til syvende og sidst gik til værks. Masser af testflyvninger. Alle dele testet uafhængigt, før de blev testet integreret. Ingen nye landvindinger taget uden en fuld integreret test af skridtene før.

When Gutenberg died the paper book had well over 500 years of life left. When James Watt died, the steam engine had over 150 years of useful life left. A couple of weeks ago Howard Scott, one of the creators of the LP, the long playing record, died - having seen his invention cease into a niche product well before his death. When Bill Gates dies, Windows will be long gone. The lifetime of technologies is dropping dramatically. Where once, inventing something meant a life in eternity, it now just means 'a few years of relevance'. It's becoming harder and harder to leave a mark on society that will outlast you, if that's the kind of thing that motivates you.

News

Just not what you want. Too much 'Made You Look!'. Too much rehashing. Too much process journalism. No consequences. No real world building. No real analysis, just storytelling. I'd like less of what I get and all of these. Also: It's not on my Kindle, so not on my phone and not on my forthcoming Kindle Paperwhite, readable in bed, after my girlfriend turned the light off.

Feeds

It's one thing that not enough sources output them anymore, but the feed reading process is broken. I've recently recovered from feed reading bankruptcy after about 2 weeks of not attending to my feeds. The experience was horrible; too much piling through the same shit, too much piling through shit I've actually already seen on Twitter or Facebook. What I'd like from my feed reader is higher relevance and "scale adjusted relevancy". When I don't have the attention, I want the required attention to scale so I can catch up in one go on 14 days, not have to do 14 days of feed-tending work. My feeds should be a newspaper. A week old feed-surface should be a weekly magazine. A month old feed-surface should be a monthly magazine. A year old feed-surface should be an annual review. None of this should require anything but collaborative work.

Blogs

Well, mine is anyway. What's particularly broken about is it subpar integration with the flows, and the absence of readership after everyone moved to flows.

Shutting down the client competition and innovation. Shutting down feeds and unauthorized access. Shutting off access to the follower graph. This is all bullshit. Twitter has become old media, like Edd Dumbill says, and is as broken as old media.

Are the fixes for all of these connected? Is it one-true-thing? I think I have a dream about what it is. It's blogs again, but this time hooked up to a 'reasonable' aggregator with a mission to act as an infrastructure company. The aggregator connects islands of users to form a distro twitter. The aggregator is uniquely positioned to sell firehose access to the social feed, to the graph, to collaboratively enhanced shared links. It Just Might Work.

I'm imaging this getting built off an open source conversational platform - you're allowed to think Diaspora - which initially thinks of itself as a caching hosted twitter client, but really is intended to allow other hosted islands of friends to connect. I think Diaspora got it wrong by inventing too much, and claiming too much. What I really want is just to leave, without slamming the door shut.

Is Instagram even a tech company? I'm not trying to be flippant here. I find this a real and interesting question. There's been hundreds of succesful social image sharing companies before and there's going to be a hundred more. In that sense, Instagram is just a recent hit in a string of hits.

In fact, Instagram's engineers keep it simple on purpose. They try to invent as little as possible.

The consumer side of the mobile revolution is more a media revolution than a technical revolution. Most of the hits are simply new rides at the amusement park, or fresh hit singles, because we got bored with last years' hits.

Isn't Instagram more the Angry Birds/Rovio of photos than the Apple of photos?

That's not to take away from Instagrams colossal succes, more power to them! It just means that we need to evaluate the succes differently. Instagram didn't disrupt or disintermediate or transform or restructure anything. Instagram entertained and connected a lot of people for some time. That's a different function than a typical tech company. It doesn't generate the same kind of aggregate benefits more and more tech output from a company does. It doesn't produce the kind of grinding deflationary pressure on older technologies, other companies, other kinds of photography, for instance, that we're used to. Sure, Kodak is dead - but didn't Apple do the killing? Was it Instagram? Weren't we sharing visuals at about the same clip before - just on Facebook + Photobucket + Twitpic + Yfrog + Flickr and on and on.

What's your take? Is Instagram transformative - or just this really nice way to share photos right now....

This video, with delicious robo-voice, sounds like viral advertising for some razor-smart near future sci-fi film, but is in fact an a real promovideo for an ad-hijacking server discovered in the wild by Justin Watt during a recent hotel stay. Movies routinely sell the in-film ad space to companies, so it's to be expected that this will happen in a fully mediated reality as well. Still great sci-fi fodder. Our lives online are subjected to unwilling full on transparency, while the transparency of the layers of tech beneath our world of ends degrades. Network neutrality is all about this kind of rewriting. Throttling is just another kind of rewrite.

En slags follow up om det dybere og dybere vand.

Sidste år skrev Marc Andreesen et meget læst essay i Wall Street Journal om hvordan software er ved at æde hele verden Den umiddelbare aflæsning af essayet går på, at Moores Lov og den stadig mere sofistikerede hardware rundt om os til stadig lavere priser gør at softwaresiden af... ja, af hvadsomhelst, fylder mere og mere. Apples revolution af mobiltelefonien går ud på at lykkes med at designe noget hardware, der pludselig gør telefonen til et effektivt og dynamisk apparat til at levere software på. Det handler ikke særlig meget om, at Apples telefon - som telefon - er bedre end hvad Nokia havde før. Så langt så godt.

Men det modsatte pres er ligeså relevant: Jo mere kultur vi gror rundt om os; jo flere processer, jo mere udtryksfuld bliver den kultur. Og efterhånden som udtryksfuldheden går op, sker der et kvalitativt spring: Kulturen bliver software. Wired online har en fascinerende historie om sådan et fænomen - om hvordan den kinesisk-/taiwanesiske IT-maskine med sin tiltagende sofistikation tager livet af de integratorer, HP, Cisco, Juniper, der hidtil har været specialiserede i at samle komponenterne til brugbare produkter. Komponentindkøbsprocessen er simpelthen på vej til at blive så sofistikeret at der ikke er nogen grund til at gå gennem integratoren - man kan lige så godt få leverancen direkte fra fabrikkerne. Leverandørerne bliver mere og mere til software - i stand til at integrere efter behov for brugerne. Integrationen i sig selv er gået fra at være hardware - Ciscos, HPs, Junipers, til at være software i købsprocessen.

Here's an extremely interesting slide deck(pdf) on the opportunities in chip design, if we allow a little more of the physical characteristics of the chips to play a role in the programming interface. Turns out symbolic simulation of floating point math (i.e. real numbers) is extremely compute expensive when you consider that the physics is naturally 'solving' these equations all the time, just by being, you know, real physical entities.

The 'only' cost of a projected 10000x improvement in efficiency is a 1% increase in error rate, but if you change the algorithms to suppose a certain level of error - a natural notion in the realm of learning algorithms and AI-techniques - that's not really a problem at all.

The reason this is important, is, that we're moving from symbolic computing to pattern matching, and pattern matching, machine learning, AI and similar types of computation all happens in the real domain. A 10000x advance from more appropriate software buys about 13 applications of Moore's Law - something like 20 years of hardware development we could leapfrog past.

A few years back I wrote down a guess - completely unhampered by statistics or facts - that in 10-15 years 90%-95% of all computation would be pattern matching - and I stand by that guess, in fact I'd like to strengthen it: I think, asymptotically, all computation in the future will be pattern matching. This also ties into the industrial tendency I was talking about in the previous post. Increasingly, filtering is where the value of computation comes from, and that makes it highly plausible we'll see specialized chips with 10000x optimizations for pattern matching. Would Apple need to ship any of Siri's speech comprehension to the cloud if the iPhone was 10000x more capable?

Postscript: Through odd circumstances I chanced on this link the exact same day I chanced by this report(pdf) on practical robotics. I'll quote a little from the section in that called 'Let The Physics Do The Walking':

Mechanical logic may be utilized far more often in Nature than we would at first like to admit. In fact, mechanical logic may be used for more in our own robots than we realize[...] Explicit software we originally envisioned to be essential was unnecessary.

Genghis [a robot] provides a further lesson of physics in action. One of the main reason he works at all is because he is small. If he was a large robot and put his foot in a crack and then torqued his body over, he would break. Larger walking machines usually get around this problem by carefully scanning the surface and thinking about where to pyt their feet. However, Genghis just scrabbles and makes forward progress more through persistence than any explicit mechanism. He doesn't need to build models of the surface over which he walks and he doesn't think about trying to put his last foot on the same free spot an earlier foot was placed.

Sometimes general computing is just too general for it's own good.

Let it be known, that this Morten Lund-blogpost and this 4-year old classy.dk post are talking about the same thing, only differently.

Hvad skal vi leve af i fremtiden? Det er et spørgsmål, der trænger sig levende på her i krisen, hvor jobs forsvinder og de nye fag ikke formår at lægge nye jobs til.

Weekendavisen havde for nogle uger siden, en serie om det, påstås det, skjulte Produktionsdanmark ude vestpå, der hvor alle fabrikkerne ligger. Og der hvor alle arbejdspladserne tabes i disse år. Det er næppe noget tilfælde at artiklerne er skrevet af Jesper Vind Jensen, der vist voksede lidt længere nede af den samme gade i Ribe vi boede på.

Jeg ved ikke om jeg køber præmissen om at det vestlige industri-Danmark lever skjult, men reelt er det at der ryger en masse faglærte og ufaglærte jobs på fabrikkerne i de her år - og at de nyligt arbejdsløse fabriksarbejdere næppe bliver omskolet til symbolanalytikere lige i morgen, med lovende jobudsigter i organisationsudvikling og kommunikation nede på kommunen.

Det er jo noget juks, og som teknolog, en af de, der arbejder i mandskabsfrie, nærvirtuelle virksomheder, er der naturligvis lidt ekstra at tænke over, hvis man ellers godt kan lide det her og også ønsker naboen velstand og et godt liv.

Artiklen fik mig til at tænke på hvad for nogle jobs sådan nogen som jeg selv egentlig er med til at skabe. Jeg har arbejdet 4-5 forskellige steder. 3 af dem så tidlige projekter at der ikke rigtig foregik andet end udvikling, og de to andre "rigtige" virksomheder med omsætning og den slags. Så længe man er i de små udviklingsvirksomheder så producerer man ingen jobs, udover det man selv har. Jobskabelsen starter først når der faktisk er omsætning; så skal man have sælgere, supportere, regnskabsfolk osv.

De steder jeg har arbejdet var development/andre mixet noget i stil med

- 1:5 i SimCorp, som var en rigtig biks med rigtige kundekonsulenter og overskud

- 1:7 i Ascio/Speednames, som havde omsætning, men et ret permanent underskud i nogle hårde kriseår i starten af århundredet

- 1:0 i Imity

- 5:1 i Zyb, som forærede varerne væk, men dog havde en brugermasse

- 1:1 i Carecord, som har et mere fornuftigt startup setup end vi havde i Imity

Ingen af stederne - undtagen i Ascio - har jeg været mere til at producere en fornuftig mængde non-tech jobs. Jacob Bøtter ville i en serie tweets have den type virksomheder, der ingen jobs skaber, til at være en ny økonomisk verdensorden, men pointen er jo lige præcis at de ikke bliver en ny økonomisk verdensorden! Der udbetales formodentlig mere i løn til de supermarkedsansatte på Vesterbrogade end til de IT-startupansatte, uanset at supermarkedet betaler dårligere.

Jeg sad for noget tid siden over en middag og diskuterede hvad teknologi gør ved ens job, og konklusionen er brutal men indlysende. Teknologis hele virkemåde er at slide de ansattes kompetencer ned. Det er ikke fordi teknologien forsvinder med tiden.

Jeg læste engang at Bill Gates på et tidspunkt havde sagt til en amerikansk politiker noget i stil med "hvad angår landets fremtid, så kan I ikke satse på os. Der skal bygges nogle biler. Der skal fabriksgulve til." - underforstået; vi i tech kommer ikke til at skabe arbejdspladser der ikke kræver tech skills. Der skal skabes nogle jobs med at flytte rundt på nogle atomer, der vejer noget, hvis vi skal have fuld beskæftigelse her i landet. Så hvis vi skal tage den påstand alvorligt - hvilken startup skal man så gå ind i næste gang?

On the eve of Jobsos exit, let's have another look at the famous video where Steve Ballmer responds to the iPhone

The interesting thing here actually isn't how ridiculously wrong Ballmer was about the success of the iPhone, but rather what he says about his own product - a recent winphone from Motorola

It's a very capable machine. It'll do music, it'll do internet, it'll do email, it'll do instant messaging[...]

It'll do. Everything about that statement is wrong, from the words 'capable' and 'machine' to the itemized lists of features on the phone that'll do. Nobody wants something that'll do. Well, maybe toilet paper.

It's a chestnut of interface critique: Embodiment is good, the concrete beats the abstract, nobody reads online. It drives interfaces towards the tangible, and I'll be the first to agree that good physical design (and design that *feels* physical) is pleasurable and restful on the mind.

None of these facts are, however, easy to reconcile with the fact that every day 15% of the queries seen by Google are queries Google has never seen before. Put differently, the information space Google presents to the world grows by 15% every day. Imagine a startup experiencing this kind of uptake. You'd consider yourself very lucky - even if a lot of those 15% will be spelling mistakes etc.

The 15% number sounds staggering, but it's when you compound it a little it becomes truly mindblowing - and in fact hard to believe entirely - 15% daily discovery means that in a month, the entire current history of Google searches fades to about 1% of all queries seen. Obviously this isn't a description of typical use, but it is a description of use, none the less. This is complete rubbish and I'm emberrased to have written it, read on below

Now, try to imagine building a physical interface where all uses it has been put to, since the beginning of time, fade to 1% in a month. That's very hard to do. The thing is, that thinking is different, language is different, information is different. The concrete approach breaks down when confronted with the full power of language.

This is also why we'll always have command lines.

COMPLETE RUBBISH ALERT

So, above I make a really embarrasing probability calculus 101 error, when I tried to compound the "every day we see 15%" new queries statistic. This isn't a toin coss, but something completely else. Chances are that "every day we see 15% new queries" compounds on a monthly basis to .... 15% new queries. To see why, I'm going to make a contrived draw of numbers that match the "every day we see 15% new queries" statistic.

Let's suppose we wanted to produce a string of numbers, 100 every day, so that we can say that "every day we see 15 numbers we haven't seen before". The easiest way to do that is to just start counting from 1, so the first day we see the numbers 1..100. Of course on the first day we can't match the statistic, since we haven't seen any numbers before.

On the second day however we draw 85 times from the numbers we have already seen - we just run the numbers 1..85 - and for the remaining 15 we continue counting where we left off on day 1, so on day 2 we would have the numbers 1..85,101..115. On day 3 we run 1..85,116..130 and so on.

This way, it's still true that "every day we see 15 numbers we haven't seen before" but at the end of the first month (30 days) you will have seen in total the numbers 100+29*15 = 535 numbers.

In month 2 (let's say that's 30 days also) we change things a little. Instead of just running through 1..85 we continue upwards until we have cycled through all the numbers we saw in month 1. There were 535 of those, so that'll only take 7 days. You'll see 30*15 = 450 new numbers and 535 old ones when doing this or 46% numbers you've never seen before of all the numbers you see in month 2.

In month 3 (still 30 days) we do the same thing as we did in month 2, but this time there are 535+450 old ones, so the 450 new ones only amount to 31% of all the numbers we see in month 3.

We continue like this. The most already seen numbers we have time to run through doing 85 a day for 30 days is 30*85, and we'll still have 30*15 new ones, so lo and behold, when we continue this process we end up seeing 15*30/(15*30+85*30)=15*30/(15+85)*30=15/100=15% numbers we have never seen before.

Once in a while it's worth wondering what profound changes we're in for in the next decade if any. With that in mind, what's going to be the most common prosthetic in 2020 that none of us have today? Phones and smartphones are out of the running - we already all have those. Tablets are almost out of the running - or they would probably be the answer.

Let's exclude them - then what is it going to be? Or is the question wrong - like asking "what will be the most popular programming language in the home in 1990" in 1978? Will evolution be elsewhere? Won't technology be evolving in the prosthetic space at all?

My professional bet is on biohacks, but that might just be a little too science fictiony for a while to come. Other than that a swarm of chips around the phone seems likely to me. iPhone ready jackets and watches and glasses and pockets. 2020 might be too close for that. It might take another 5-10 years.

Working under the assumption that Marc Hedlund's post - on why Wesabe didn't make it as a personal financial aggregator - is accurate, there are a couple of superficial conclusions: At play in success are convenience, utility and perceived utility - which is not the same thing as actual utility.

By Hedlunds reasoning, Mint was way better at perceived utility than actual utility, having a low quality of imported financial data, but was clearly more convenient.

Supposing only these three things are in play, there are two possible conclusions: Utility doesn't matter, only perceived utility does. And the other one: Convenience is essential. You don't need to compete on utility above a basic threshold, you need to change the rules a little, so people care about your product at all, and then you simply win on convenience after that.

When I've been doing stuff with Morten, we've always had a basic dividing line in how we are interested in things, which has been very useful in dividing the work and making sure we covered the idea properly. Morten is intensely interested in use, and I'm intensely interested in capabilities and potentials.

Any good idea needs both kinds of attention, so it's good to work with someone else who can really challenge your own perspective. If only we had a third friend who was intensely interested in making money we could have really made something of it. It's never too late, I suppose.

Anyway, my part of the bargain is capabilities. Yesterday evening, and this morning, I added another year worth of lifetime to my aging* Android phone, one of the original Google G1 development phones.

It's a slow, old device compared to the current android phones. Yesterday, however, by installing Cyanogenmod on the phone, I upgraded to Android 2.2 - Froyo - and boy, that's a lot of capability I just added to the phone**.

First, about the lifetime: Froyo has a JIT, an accelerator if you're not a technical person, which makes it possible for my aging phone to keep up with more demanding applications, expecting better hardware.

Secondly, Froyo is supported by the Processing environment, for experimental programming, so now I can build experimental touch interfaces in minutes, using the convenience of Processing. This makes both Processing and the phone infinitely more useful.

Thirdly, Froyo has a really nice "use your phone as an Access Point"-app for sharing your 3G connection over WiFi***. I had a hacked one for Android 1.6 as well and occasionally this is just a really nice appliance to have in a roomfull of people bereft of internet.

Fourth, considering that Chrome To Phone is just an easy demo of the cloud services behind Froyo it sure feels revolutionary. Can't wait to see people maxing out this particular capability.

Fifth, and it feels silly to have to mention this, but Froyo is the first smartphone HW/SW combo you can add storage in a reasonable way, i.e. moving apps + everything to basically unlimited replaceable storage.

On top of all the conveniences of not being locked down, easy access to the file system, easy backup of text messages and call logs; this feels like a nice edition of Android to plateau on for a while. If the next year or so is more about hardware, new hardware formats, like tablets, and just polishing the experience using all of these new capabilities, I think that'll work out great.

* (1.5 yrs old; yes it sucks that this is 'old'. We need to do better with equipment lifetimes)

** I'm going to but a couple of detailed howto posts on the hacks blog over the next couple of days, so you can do the same thing.

*** For Cyanogenmod, you need this.

A couple of minutes ago, the technician got done installing the new remote read-off functionality on our heating system, here at my coop. This ends the need for annual manual read-offs by the coop board (i.e. me) and estimated usage numbers. Presumably the heating company will also be able to tell us interesting stuff about how we spend our energy dollars from the new masses of data they will have available.

Out of curiousity, I asked the technician how the system communicated with the outside world. The answer was simple and obvious, there's a GSM modem in the remote read-off module.

There are two things that interest me about that: Firstly, the infrastructure wasn't built for this. The application could never pay for suitable infrastructure on its own, but as soon as the infrastructure is already there, this becomes a very hard problem with an extremely easy solution. Infrastructure fixes problems in a completely different way than engineers do. Secondly, embedded computers in Copenhagen have access to about the same level of infrastructure - or better - as entire villages in sub-saharan Africa.

I took a look back at my weblog entries for 2003. "Only 7 years ago", it's easy to think, but frankly, reading them, they feel positively pre-historic. I hardly recognize my language or interests from back then.

Let me take you back to the year 2003 in the context of infrastructure.

Back then we were talking about replacing RSS with Atom, because everybody not Dave Winer were in some kind of argument with Winer. We were debating what to call Atom in the first place. This is infrastructure that has clearly gone to the background as we've moved our stuff into silos. I'm a little sad about this, but the kind of fighting that the RSS/Atom battle is an example of, is why silos sometimes make more sense and simply win. We need our infrastructure back from the silos in the next 1-4 years.

There were tons of upstarts, and huge debate, over public WiFi. Incumbents - both those providing internet services and mobile information - didn't want public WiFi to happen. In general we were all talking about a dreamy future with data everywhere, which clearly hadn't materialised yet. Today the public WiFi plans seem like a quaint, patchy solution to a problem that got a better infrastructure solution later on. Not that the alternate terror-free future with abundant, communal WiFi everywhere wouldn't have been great, but we seem to be making a reasonable go of it in the future we actually got.

This pattern, of "patchy, but possible" local solutions to problems, that we since get a global fix for from infrastructure, is recurring. In 2005-2007 location was one of these. Plazes - location from WiFi - was a good idea that has since become almost completely irrelevant. Infrastructure has cut that problem differently; now the important thing is access to social data - how can I conveniently socialize a particular place - not location data, since location is now an ample resource (whereever WiFi is, anyway)*. We didn't have maps, and they weren't free.

2003 was also the year we first heard about Skype. I wrote about it (in Danish) and got a reply to the effect that "I don't believe in it. I already have a phone", which is a lot like the classic responce Xerox got way back when they were trying to sell photocopiers for the first time: "But I already have a secretary".

The old rationale doesn't become less rational, because of the new technology - it's about all the the new rationales that suddenly make sense. Last I checked, Skype accounted for 12% of all international calling.

Also, in slightly different infrastructure, 2003 was when Google started rolling out AdSense, so we got a first stab at how online media were going to get paid. There was a lot of clearly unfounded optimism about this, and the world has basically moved on, even while totally assimilating to AdSense. Now we're talking about stuff like Flattr instead. Early results are at least interesting.

And to think, that back in 2003 we weren't even dreaming about iPhones, iPads and Kindles. About AppStores. Or Twitter or Facebook or YouTube, for that matter. Sounds to me like we simply missed the places for people. Including markets.

*It's worth noting here, that location technology is actually still a patchy mess of Skyhook WiFi assisted location + GSM cell assisted location + GPS proper, but those are technicalities. The key thing is that the abstraction is in place, and is good enough, that we just believe in it.

I have similar feelings about what we did with Imity back in 2006, btw. Location has taken care of most of that problem. Infrastructure cut it up in a different way, than what we had planned for.

Hvis man gerne vil vide hvorfor offentlige IT-projekter bliver så dyre og dårlige, så kan man starte med at læse her. Det er sund fornuft at have en institution som Datatilsynet, men hvis udgangspunktet er not-invented-her syndrom som f.eks. udtalelsen "Allerede det forhold, at medarbejdernes login sker uden digital signatur, gør, at den er vi ikke med på", som nævnt i artiklen, så umuliggør man en fleksibel adgang til at prøve med ny teknologi i de offentlige IT-systemer. Farvel til de utallige fordele vi andre høster på Facebook, Twitter o.l.. De kommer aldrig i spil i offentlig sammenhæng.

Det bliver et enormt problem, i et land hvor den offentlige sektor er så stor. Hvis vi skal have vækst og velstand i Danmark, så er vi nødt til at finde ud af at bruge IT så godt som muligt, og så hurtigt som muligt, pg så er det en katastrofe at halvdelen af vores økonomi simpelthen har annuleret innovation på den måde.

De der gider lege med på den form for defensivt kravrytteri tager sig godt betalt for det, og når de defensive kravlister får lov at vokse uden begrænsninger, jamen, så bliver det dem og ikke anvendelighed eller nye funktionsmåder, der kommer til at bestemme de webtjenester vi får.

Det bizarre her er at lovgivningen - og Datatilsynet - ikke skelner mellem data, som der er tale om her - elevplaner - og så de virkelige kernedata i systemet, vores skattedata osv. Hvis man fryser alle vores mellemværender med staten på Fort Knox-niveau, så lukker man ned for en masse kreativitet ude i kanten. Tænk nu hvis man tog det lige så sikkerhedsbevidste syn på sagen, at man skal arbejde på at undgå at risici for noget så harmløst som elevplaner eskalerer til alvorligere områder.

So Apple passed Microsoft in market cap the other day, and the really interesting thing about it was that it wasn't really bubbly stock market frenzy that did it. Apple is trading at a 21.50 P/E which isn't absurd, even if it is higher than Microsoft's.

Why is Microsoft stalling? Because they already won.

Some years back, before she was fired from HP, Carly Fiorina made a remark about the future of HP to the effect that since the market for PCs is pretty much completely saturated already, you can't really expect the tech sector to outgrow the economy as a whole. Companies are already spending all they can on IT. There's a soft ceiling somewhere, for how much of your revenue can go into tech spending and the corporation is approaching that level.

Microsoft gets all it's revenue from this no longer expanding slice of tech spending, so there is simply no way to grow beyond the few percent of general growth.

The deflationary power of technology can push these limits a little, but the key deflationary force these days isn't the PC as such but rather the internet and the networking of businesses.

Apple on the other hand has a lot of market share to gain in laptops and phones. The only saturated Apple-market is digital music players.

Sidste uge mødtes en lille gruppe mennesker og diskuterede Apples nye lukkede regler for brug af iPhone-platformen og Facebooks massive landgrab annonceret under den nyligt overståede F8 konference.

Snakken var god, men en smule svær at referere. Det kunne være blevet en meget aktivistisk samtale om principper, men det blev istedet en forholdsvis bred samtale om hvordan teknologiverdenen egentlig udvikler sig, i en dynamik mellem markedet - der er bedst til "dyb" innovation - og de enkelte virksomheder - der er bedst til fokuserede hurtige fremskridt. Vi snakkede om at der er en dynamik mellem de to måder at udvikle på. Virksomhedens forsøg på kontrol skaber værdier for virksomheden, men provokerer også konkurrence andre steder, og i det lange perspektiv udligner den slags sig så.

Virksomhedsperspektivet på åbenhed vil være at det er nødvendigt for at vokse - i et stykke tid. På et tidspunkt vil det ikke nødvendigvis, for den modne virksomhed, give mening mere, fordi det ikke genererer mere vækst - og så er det fokus flyttes ud på markedet igen, hvor åbenheden kan producere innovation.

Så diskuterede vi om der trods alt er noget anderledes ved de nye nær-monopoler. Ved Google, ved Facebook og ved app-storen. Om man kan udkonkurrere den enorme akkumulation af data som f.eks. Google har. Konsensusformodningen efter et stykke tid var nok "ja", men det tager helt klart tid.

Vi snakkede om hvad man bør stille af krav til sine platforme, for ikke pludselig at være med i en lang sej dødskamp, hvor kontrollen strammes for at tjene flere penge. "Der skal være en kattelem", sagde Niels Hartvig, og brugte sit eget Umbraco som eksempel. Der er ingen tvivl om at Umbraco-projektet primært drives af den sponserende virksomhed Umbraco, men kode er kode, og hvis balancen mellem Umbraco - virksomhedens - interesser og Umbraco - open source CMSet - bliver uspiselig for nogle af deltagerne/brugerne af projektet, så kan de gå deres vej og tage koden med sig. Det vil koste, måske, på innovationstempoet, men ikke på virksomhedens strategiske beslutninger.

Vi diskuterede også hvordan man ikke skal glemme at nogle af de her truende monopoler er blevet monopoler ved overhovedet at etablere et marked, og det er lige præcis det en god privat sponsor kan bidrage med. Det er Amazon, der med Kindle har skabt verdens første volumenmarked for e-bøger. Det er Apple, der med iTunes og App-store har skabt markeder for mobil musik og software. Det er Google, der har skabt moderne søgemaskinemarketing. Man kan ikke bare sådan på principper sige "åben=godt". Der er nogle kvaliteter - transparens etc. - der er gode for markedet, og nogle problemer når markedsdeltageren pludselig også er med som markedsdeltager f.eks., men det er ikke så sort hvidt.

Dynamikken er mellem de krav vi andre stiller til markedsdanneren før vi synes der er lavet et økosystem, og så de krav markedsdanneren har, før det overhovedet giver mening at lave et marked.

Vi snakkede om hvordan modstillingen "åbne platforme" vs "dybt integrerede, brugervenlige platforme" som man set lavet for Apple-casen, er forkert. Der er masser af convenience og brugervenlighed i at være åben; at lade folk drage de fordele af f.eks. iPhonen som de gerne vil.

Vi snakkede forbløffende lidt om Flash og de konkrete forhold v Apples særlige lukkethed. "Langsigtet dynamik vs direkte kontrol" opsummerer balancen i kontrollen fint der, kom vi vist frem til. Vi diskuterede om Apples argument at "kontrol giver bedre software" overhovedet passer. På kort sigt måske, men på langt sigt udelukker man innovation, og der er plausibelt at det gør platformens langtidshorisont mere ustabil, end den ville være som åbent marked.

Endelig så snakkede vi om, for tilfældet Facebook, hvorvidt Facebooks sociale graf er lock-in eller ej. Hvordan beskytter vi os mod en alt for absurd switching cost engang i fremtiden. Thomas Mygdal foreslog et par spørgsmål om det at runde aftenen af med.

Forslag til hvordan man kan lade være med at aflevere ejerskabet til al den adfærd Facebook samler ind. Til hvordan man kan satse på en distribueret identitet, istedet for den Facebook-identitet, der lige er blevet aktiveret på tusindevis af websites, og endelig om ikke Facebooks seneste afprivatisering af data vil føre til en øget offentlig opmærksomhed på om firmaet overhovedet lever op til databeskyttelseslovgivningen rundt om i Europa.

Der var almindelig tro på at der også er en vekselvirkning mellem det distribuerede og det monolitiske/monopolkontrollerede, men stor spredning i budene på hvad tidshorisonten så er for at det flipper over til mere individuel kontrol igen. Sådan som hovedregel, tror jeg budet var, at der måske er en 5-års horisont tilbage at være ubekymret monopolist i for Facebook.

[Sådan så aftenen ud, set fra min notesblok. Det er farvet af den ballast jeg selv kom ind i samtalen med, og dækker naturligvis ikke hvad alle de andre har tænkt; samtalen var god og mangfoldig. Men en slags referat er det dog]

So, the last couple of Facebook UI changes have been about making Facebook more Twitter-like, stream-oriented and conversation-oriented. With the recent announcements from Twitter's development-conference and Facebook's new stuff today however, the two companies are on clearly divergent paths

Twitter's future is about enhancing the value of real time*.

Facebook's future is about enhancing the value of identity**.

This is pretty much how it used to be, before Facebook did all that work to fend off a perceived threat from Twitter I guess, but having the two companies formulate their future vision within just a couple of weeks makes it clear how completely different they are.

* with more data and new relevance engines.

** sharing the identity to a broader audience than now, making it easier to mix in richer data with a profile.

It seems we're in for a reversal of the privacy defaults on the web, unless you want to stay off the social media most of the time. Facebook is reversing the default anonymity we're enjoying now. Sure, doubleclick maybe already did that a decade ago, but at least we've had the illusion. That's all gone now.

This means that the content filters that were previously only relevant for walled-in corporations who did not want their frivolous employees to do frivolous non-work suddenly become relevant for all of us.

It's okay to wear a badge as long as we stay in Disneyland, but we'd probably like to take it off when we're passing through the red light district.

I see a great new market here for the filter vendors. Personal reputation management.

Why is the web landscape different today than it was in the mid 90s? Is it because desktop computers are way better? Is it because our idea of what makes a good website has changed? No. It's the tools, stupid. You can read Joe Krause's post on building Excite vs building Jot as an example. The tools available in the 90s were expensive and cumbersome. Now, there's intense competition between largely free and very capable web frameworks, and on the server you have Apache and MySQL on commodity hardware to lower the price, which makes completely new services feasible.

This is what makes the web such a dynamic place. If you look around at the trendsetting web startups today, practically none of them use tools that even existed 10 years ago. They've switched to tools that are cheaper, but more importantly, tools that are way more efficient for the development team to use. jQuery and Prototype are improvements over roll your own AJAX frameworks. Small teams are reaping huge productivity benefits from Django and Ruby on Rails. Some have moved on from these, even.

Yesterday Apple turned off this particular engine of creation on the iPhone platform. You won't be allowed to innovate toolwise on the iPhone.

Apart from this being hideous, on openness grounds, it seems profoundly stupid. Nobody is using Flash to piss people off. Flash is used because it's an efficient way to grow rich media applications fast. This is important. Last year I wrote about the world of good it did for the ARToolkit to make the migration to Flash. Making a very useful library available in an efficient creative environment suddenly made this previously stale technology relevant again.

Likewise with Rails and Django. The specific needs of these popular frameworks have led to a revolution in the underlying webframeworks as well. Apache is no longer the sine qua non of the open source web stack.

It is simply not the case anywhere that most of the useful innovation around an open platform comes from the platform owner/sponsor/creator.

The notion that keeping people on inefficient Apple tools will make apps better is plainly ridiculous.

That Apple values corporate control over the value extraction on the iPhone platform over innovative pace is a bad omen for users and developers.

[Update: Jean-Louis Gassée's post on the matter is good, doing a way with the silly "good for users" defense and simply framing it as a questions of owning the momentum. ]

[Update II: Here's the thing: This wouldn't feel half as rotten if those had been the rules from the beginning, it's the shifting ground, the bad stewardship of the market that really takes the cake]

The conversational revolution of the internet during the last decade has brought about a lot of companies trying to engage with us at a different level than just being corporations. Instead of dehumanizing Terms of Service legalese and Acceptable Use Policies we're treated to an onslaught of microcopy as social markers, that this is a place where people deal with people and we don't need a contract. We could call these social disclaimers.

The problem of course is that some times we just want the frictionless and guaranteed behaviour of the market, instead of conversational engagement.

My list of the top three most overdone social disclaimers is here

- Cuteness. It doesn't work when you cut yourself on something broken after the cute overdose

- We hate spam too. Unless this means "we will actually never send you any email to this email address" you probably don't mean the same thing I do, and I stopped trusting these messages years ago. It's a polite fiction, at best

- We're people like you. This kind of signaling used to indicate that we belonged to the same monoculture as the people selling to us, but it's too old and too common to carry that meaning any more - just like bloggers stopped being "people like me" some time around 2005.

De forskellige reaktioner på håndmixeren, som jeg opfandt forleden, var en reaktion på den sædvanlige vi-snakker-om-nye-ting bølge på nettet, aktualiseret af Google Buzz, men ellers senest set i forbindelse med iPadden og sidste år med Google Wave.

Dag 1-reaktionerne på nye ting er som regel helt i skoven. Det er en blanding af at man ikke selv kan høre forskel på de rigtige ting, der trods alt bliver sagt, og så alt det andet - og så en række standardforvirringer i forbindelse med ny teknologi

- Vurdering ud fra eksisterende rationaler ser man tit, den er endda taget til protokol i klassikeren Innovator's Dilemma. Det nye vinder ikke frem ved en punkt-for-punkt substitutering af det gamle, så det er som regel forkert at vurdere i et pointsystem, der allerede har en udpeget vinder. I Buzz eksemplet er det den ret meningsløse "skal jeg så droppe Twitter?"-diskussion.

- Forvekslinger af mål og midler lyder som en nem begynderfejl, men det er det ikke, vi gør det allesammen hele tiden - det ville være uhyggeligt at skulle klare sig uden. Det er en del af hele den menneskelige tænkeevne. Mål er tågede og flydende og usproglige og omskiftelige. Midler er nemme og håndterbare og til at udpege. Så vi formulerer os gennem midlerne, når vi egentlig tænker på målene. Vi 'googler' og på engelsk xeroxer og hooverer man, fordi det konkrete er nemmere for hovedet end det abstrakte. På alle planer.

Sådan starter de meningsløse diskussioner om hvordan man retweeter i Buzz fordi det er for abstrakt at diskutere hvordan opmærksomhedsøkonomien virker i Google Buzz. Desværre bliver det hurtigt til en masse alt for bogstavelige sammenligninger af ting, der egentlig ikke behøver være beslægtede. - Kognitive nulsums-spil fylder altid meget på dag 1. Hvem taber, hvis det nye vinder? Som regel er det meningsløst af de to grunde ovenfor: Det nye har, hvis det er ordentligt nyt, ikke samme mål og midler som det gamle, så man kan ikke skære den på den måde. Og verden er i øvrigt ikke designet til at gå op, den er et gigantisk marked eller en økosfære af ideer.

- Teknologisk totemisme kan vi kalde det, når noget gammelt eller nyt tilskrives en uhyrlig række af konsekvenser, der i virkeligheden har meget komplicerede årsager. Teknologi ændrer virkelig verden af og til, og bliver derfor let til en slags totem. Magiske ting med en magisk evne til forandring. Herfra kommer de definitive meldinger om hvordan den digitale virkelighed om 10 år ser ud, nu hvor Google Buzz er kommet. Meldinger om privatlivets snarlige afskaffelse f.eks. Med få megaopfindelser som undtagelse - mikrochippen, mobiltelefonen og internettet i teknologibranchen - bliver det aldrig sådan fremtiden ser på sig selv.

- Der er aldrig nogen der opdager leapfrogging på dag 1 selv om leapfrogging måske er det vigtigste teknologi kan gøre. Mobiltelefonen har da ændret vesten, men i (dele af) Afrika er den forskellen på at have information eller ej, på at have en bank eller ej. Den store udligner. Med Buzz burde vi diskutere hvordan min mor, der har opdaget email, men ikke Facebook og Twitter, nu faktisk er med i et socialt netværk, selvom jeg ikke har vist hende endnu at hun er det. Min mor har pludselig fået glæde af RSS, selvom hun ikke ved det, fordi Buzz omskaber Google Reader til en opmærksomhedskanal med et bredere publikum.

The iPad introductory video has Jonathan Ive waxing poetic on the Arthur C. Clarke chestnut about advanced technology seeming like magic. This is the core of the Apple product promise. Stuff that works super well, with flair and finesse well ahead of the curve.

Magic is even built right into the rhetoric of the Steve Jobs presentations, with the "one more thing" style product reveals.

When the iPhone came out, a reasonable reaction was "can you really build such a thing". One is used to admiring the relentless drive to perfect software and objects, no feature is sacred, everything is expendable is something else can deliver a better experience.

The problem with the iPad is that it doesn't deliver on the product promise. There is simply nothing ungraspable about the device. The screen, the battery, the applications - it's all stuff we understand very well. We're being shown a magic trick that we all know how to do.

Maybe the second version will have the OLED screen and 20 hr battery life this one was supposed to have.

Dengang jeg arbejdede ude i Ascio, snakkede jeg tit med vores systemmand, om sikkerhed. Virus er hvad det er, der er mange, og man skal passe på dem - men fordi virusproducenterne angriber så bredt, så bliver de nye virus også opdaget, og der kommer beskyttelse i antivirussoftwaren. Hvis man virkelig skulle lave noget farligt på nettet, hvis man virkelig skal være bekymret over noget, så skulle det være et determineret angreb, megt snævert, på en organisation. Angriberen ville selv teste sin malware med alle kendte antivirustestere og sikre sig at den ikke blev opdaget af de automatiske systemer. Tilføj lidt social engineering til at få det første angreb til at lykkes; det kræver ikke meget mere end at overtale en sælger til at loade en webside eller en PDF, og så er du igang.

Det lader til at det er nogenlunde er sådan angrebet på Google*, det der fik firmaet til at melde at man måske trækker sig helt fra Kina, er foregået.

Nu skal man ikke tro på alt en antivirusproducent siger om hvor farlig verden er, men det virker som om angrebet er lavet på en forbløffende komplet måde af et forbløffende antal kanaler.

Der hvor aviserne altid ender med at rapportere vrøvl, er på spørgsmålet om hvem, der er i stand til at gennemføre sådan et angreb. I al almindelighed skal der hverken mange penge eller mange mennesker til at lave dem, bare talent. Og hvem ved hvor god kontrol det kinesiske statsapparat egentlig har over dygtige hackere. Det er lidt uklart om Google virkelig tror at angrebet er regeringssponsoreret - måske gik angriberne efter den slags info Google har nægtet at udlevere til Kina, så man må tro at hensigten har været at få fat på dem. Fra nogle af beskrivelserne lyder det nu mere som klassisk industriel spionage.

* der er angrebet et mindre antal andre virksomheder også.

Fredag var jeg turist til en konference om mobiltelefoner i Afrika. Det er et spændende emne; det er fantastisk at det virker, og mobiltelefoner gør alle mulige andre former for leapfrogging muligt, nu man har lavet den teknologiske leapfrogging og lavet telefoni overalt.

Vi lagde fra land med lidt stats, så dem kan I da også få nogle af: Worldwide penetration af mobiltelefoni er ca 2/3; altså ca 2 ud af 3 verdensborgere har en telefon. I afrika er tallet 1/3 - med enorm variation, fordi der er enorm forskel på hvor rige de afrikanske samfund er. Jeg har kun været i ét afrikansk land, et af de fattigere og der var såmænd også telefoner overalt.

Prisen for at bruge telefonen - set i forhold til indkomst - er skyhøj, men sms og telefoni går lige; datatrafik ellers kan man godt glemme at designe efter. Det er kun sponseret brug af det, der virker.

Dagen havde desværre mest lige-om-lidt projekter med, ikke nogle warstories fra etablerede succeser, men der var masser af lektier alligevel.

Lektie 1: Ting ankommer ude af rækkefølge. Det er ikke bare det her med at afrika pludselig er ligeså telefondækket som vesten, der er besynderligt, det er også rækkefølgen af effekter: Teknologien først, inden i den kommer så

- Digitale penge - folk har typisk ikke haft bank eller anden mulighed for at flytte penge sikkert

- Handel - vi hørte fra grundlæggeren af BSLGlobal, en panafrikansk betalings- og handelsplatform

- Regulering - 90% af al handel i afrika er uformel gadehandel, 90% af den slags handel er ureguleret og der betales ikke skat. Digitale betalinger ændrer hele spillet også her.

Så inde i den teknologiske leapfrogging kommer der finansiel leapfrogging, og inde i den pludselig en governance-leapfrogging også. Måske er den måde vi fik www på ikke så anderledes - bare se på de gamle industriers enorme besvær med at forstår netværksbåret information - men det er en tydeligere pointe i den afrikanske setting.

Desværre var det stadig en plan, og ikke en realiseret virkelighed; glæder mig til at følge med i det.

Fra den mere traditionelt indlysende ende, hørte vi (fra Stine Lund) om uddannelse og pleje for gravide og nyfødte med mobiltelefonen som kommunikationssystem. Det handler meget om at lade infrastrukturen rulle sig ud hvor det er nødvendigt; og om at bruge telefonen som digitalt undervisningsmedium. Vanskelighederne i forbindelse med overlevelse og sundhed for gravide og nyfødte er, at dødeligheden ganske vist er høj, men det er stadig særtilfældene man leder efter; det er dyrt eller umuligt bare at rulle en høj standard ud over det hele, så behovet er i bedre diagnostik og rapportering hvor kvinderne bor, og så adgang til hurtigt at eskalere til ordentligt uddannet hjælp når det ikke går som det skal.

Ovennævnte indlæg fra Herman Chinery-Hesse, grundlægger af BSLGlobal, ifølge Tomas Krag, fra refunite.org, der også talte på dagen, virkelig en star i sit hjemland, Ghana, var nok dagens højdepunkt. En af de sjovere pointer var den at international handel i Afrika er nærmest fuldstændig orienteret ud af Afrika, som et ekko af kolonisystemet. Infrastrukturen er lavet lige sådan, men det er ved at ændre sig, og Chinery-Hesse så det næste skridt op som noget, der i høj grad handlede om bare at få Afrika til at fungere som et marked, ligesom EU er et.

Udfordringerne er enorme og meget Afrika-specifikke: Robusthed, adgang til strøm til kommunikation, analfabetisme - altså hvordan tilpasser man teknologien til et marked hvor de handlende ikke kan læse og skrive, transport, regulering. Men omvendt, så er potentialet stort, netop fordi al handel nu er så lokal; værdiefangsten foregår med internationale handlende, meget tæt på råstofkilderne, og meget lidt af forædlingen med tilhørende værdi, foregår i Afrika eller på afrikanske hænder.

Vi hørte også fra en Nokia-finne, om brugerresearch i Østafrika, om hvordan Nokia arbejdede med at bruge de facto distributionen af musik - piratkopiering - som social bærer for nogle af telefonens muligheder. Der manglede lidt detaljer der, men det lød super spændende som idé.

Og vi hørte om hvordan man laver vedligeholdelses- og dieselfrie mobilmaster langt væk fra alfarvej, og sidst, men ikke mindst, antydede Tomas Krag nogle af de teknologiske vanskeligheder ved at tænke på "Afrika" som én ting. Det er et indviklet sted; der er utallige lande, utallige mobilcarriers, utallige sprog, stor mangel på uddannelse og teknologikurven er der, naturligvis, men udviklingen ser anderledes ud, fordi økonomien ser anderledes ud. Man kan ikke planlægge efter hypekurven; men må tænkte et par skridt baglæns og tilpasse sig en enklere verden, som ikke har så meget at byde på teknologisk - udover rigelig besvær - men som til gengæld har en indviklet og levende social struktur.

Det mest interessante v. dagen var næsten at bolden ruller, uden at det har særlig meget med vesten at gøre. Innovationen tilpasset økonomi og teknologi rundt om på kontinentet bliver faktisk lavet, rundt om på kontinentet.

Om sammenhængen mellem regulering og vækst, navnlig for steder, hvor det ikke virker, se - lidt spekulativt måske - Paul Romers TED-talk.

I en dag eller to endnu, kan du også få folks noter fra konf'en ved at søge på Twitter.

Jeg har kigget en smule på fremtidsproblemet vand her på det sidste. Det der fik mig til at tænke på vand var et sci-fi koncept som tegningen ovenfor: Omvendte floder, store pipelines med havvand, der på ruten ind i de mere og mere vandsultne kontinenter destilleres til menneskebrug og til landbrug.

Hvor realistisk er sådan en plan? Det er lidt besværligt at få styr på nogen gode vandtal, men til sidst fandt jeg nogen et af de steder hvor problemet allerede er akut, nemlig Israel

Her bygger man for tiden en serie enorme afsaltningssanlæg der producerer 100 mio. kubikmeter drikkevand per år.

Israels totale tilgængelige vandressource før anlæggene er 2000 mio kubikmeter vand per år - egentlig alt for lidt til den befolkning der bor i området.

Et anlæg der producerer 100 mio kubikmeter, leverer altså 5% oven i den mængde. Man skal altså "bare" bygge 20 af dem for at have en fuld kunstig forsyning - incl. vand til et landbrug, der er nettoeksportør af fødevarer.

Hvor meget energi går der til at producere drikkevand? Hvis man skal gøre det selv uden smart udstyr så må man jo dampe vandet af og kondensere det igen.

Det er meget energikrævende. Det går en kalorie til at varme et gram vand en grad, her skal vi op til kogepunktet, og så også tilføre fordampningsvarmen - ca 5 gange så meget energi som opvarmningen fra 0 til 100.

I de højeffektive afsaltningsanlæg kan man ved at bruge filtrering under højtryk og genanvende den energi man hælder ind komme ned på at bruge 4 kalorier per gram vand, altså energi der svarer til blot at varme vandet fire grader, eller ca. 150 gange mindre end naiv fordampning.

Regnet om til den effekt der kræves til et anlæg i 100 mio kubikmeterklassen er det kun 55MW effekt der forgår. Under en tiendedel af hvad Avedøreværket producerer. Nogenlunde hvad man ville få ud af vindmølleparken i Københavns havn, hvis ellers den producerede på topkapacitet døgnet rundt.

Pointen ved de lidt kedelige omregninger er, at det faktisk lader sig gøre. Man skal ikke æde hele landskabet op med vindmøller, eller bygge 40 kraftværker, for at producere kunstigt vand nok til en hel nation. Et Avedøreværk eller to og 20 destillationsanlæg forsyner et land, hvor der bor omkring 10 mio mennesker.

Hvad angår prisen: Det israelske anlæg sælger vandet til ca en halv dollar per kubikmeter - en pris der dog sikkert varierer en del med prisen på energi. I København koster en kubikmeter vand for en husstand lidt over 40 kr.

En kunstig Jordanflod kunne man altså faktisk godt slippe afsted med at bygge på et par år. Det er ikke en mulighed for de fattigste, men det kan lade sig gøre. Vand til hele Israel for en milliard dollars om året.

Netbooks, so far, haven't really been interesting. They are cheap - and of course that's interesting in and of itself - but they don't really change what you can do in the world. Their battery life, shape, weight and notably software have been much the same as expensive laptops, just with a little less in the value bundle. Which is perfectly fine for 90% of laptop uses.

That's set to change, though. New software, assuming the network, and consumer packaged for simplicity, sociality and "cultural computing" more than "admin and creation" style computing is just about to surface. Fitted with an app-store and simplified, the netbook assumes more an appliance role than a general purpose computing role.

The hardware vendors are adapting to that idea also; moving towards ultra low power consumption and enough battery life that you simply stop thinking about the battery.

Meanwhile, Microsoft is busy squandering this opportunity. They simply don't get this type of environment, apparently - and are intent on office-ifying and desktop-ifying the metaphor. Where Bill Gates "a computer on every desk" was once a vision of not having computing only in corporations and server parks it is now severely limiting. Why do I need a desk to have a computer?

I thought Bing vs Wave makes an interesting comparison. Bing is a rebranding of completely generic search; absolutely nothing new. Not a single feature in the presentation video does anything I don't already have. And yet it's presented in classic Microsoft form as if it was something new and as if these unoriginal product ideas sprang from Microsoft by immaculate conception.

Contrast that to Google Wave, which - if it does something wrong - is overreaching more than underwhelming. And contrast also Wave's internet-born and internet-ready presentation and launch conditions. It's built on an open platform (XMPP aka Jabber). The Wave whitepapers gladly acknowledge the inspiration from research on collaborative creation elsewhere. The protocol is published. A reference implementation will be open sourced. The hosted Wave service will federate. It is a concern for Google (mentioned in presentations) to give third parties equal access to the plugin system - the company acknowledges that internally grown stuff has an initial advantage and is concerned with leveling the playing field.

Does Microsoft have the culture and the skills to make the same kind of move? I'm not suggesting that there's an evil vs nonevil thing here - obviously Google wins by owning important infrastructure - but just that the style of invention in Wave, based on other people's standards and given away so others can again innovate on top of it, seems completely at odds with Microsoft's notion of how you own the stuff you own.

So Wolfram Alpha - much talked about Google killer - is out. It's not really a Google killer - it's more like an oversexed version of the Google Calculator - good to deal with a curated set of questions.

The cooked examples on the site often look great of course, there's stuff you would expect from Mathematica - maths and some physics, but my first hour or two with the service yielded very few answers corresponding to the tasks I set my self.

I figured that one of the strengths in the system was that it has data not pages, so I started asking for population growth by country - did not work. Looking up GDP Denmark historical works but presents meaningless statistics - like a bad college student with a calculator, averaging stuff that should not be averaged. A GDP time series is a growth curve. Mean is meaningless.

Google needs an extra click to get there - but the end result is better.

I tried life expectancy, again I could only compare a few countries - and again, statistics I didn't ask for dominate.

Let's do a head to head, by doing some stuff Google Calculator was built for - unit conversion. 4 feet in meters helpfully over shares and gives me the answer in "rack units" as well. Change the scale to 400 feet and you get the answer in multiples of Noah's Ark (!) + a small compendium of facts from your physics compendium...

OK - enough with the time series and calculator stuff, let's try for just one number lookup: Rain in Sahara. Sadly Wolfram has made a decision: Rain and Sahara are both movie titles, so this must be about movies. Let's compare with Google. This is one of those cases where people would look at the Google answer and conclude we need a real database. The Google page gives a relief organisation that uses "rain in sahara" poetically, to mean relief - and a Swiss rockband - but as we saw Wolfram sadly concluded that Rain + Sahara are movies, so no database help there.

I try to correct my search strategy to how much rain in sahara which fails hilariously by informing me that no, the movie "Rain" is not part of the movie Sahara. Same approach on Google works well.

I begin to see the problem. Wolfram Alpha seems locked in a genius trap, supposing that we are looking for The Answer and that there is one, and that the problem at hand is to deliver The Answer and nothing else. That model of knowledge is just wrong, as the Sahara case demonstrates.

The over sharing (length in Noah's Ark units) when The Answer is at hand doesn't help either, even if it is good nerdy entertainment.

Final task: major cities in Denmark. The answer: We don't know The Answer for that - we have "some answers" but not The Answer, so we're not going to tell you anything at all.

Very few questions are really formulas to compute an answer. And that's what Wolfram Alpha is: A calculator of Answers.

Ubicomp er den gamle drøm om beregning i alting - og her er et virkelig godt slideshow, der diskuterer om vi ikke har fået det allerede uden at lægge mærke til det - i iPods og snedige telefoner og uventede remixes af virkelighedsdata med webdata. Man får virkelig aktiveret tankerne her.

When we built Imity - bluetooth autodetecting social network for your cell phone - we did - of course - get the occasional "big brother"-y comment about how we were building the surveillance society. We were always very careful to not frame the application as being about that, careful with the language, hoping to foster a culture that didn't approach the service on those terms. We never got the traction to see whether our cultural setup was sufficient to keep the use on the terms we wanted, but it was still important to have the right cultural idea about what the technology was for, to curb the most paranoid thinking about potentials.

It's simply not a reasonable thing to ask of new technology, that it should be harm-proof. Nothing worthwhile is. Cars aren't. Knives aren't. Why would high-tech ever be. And just where in the narrative of some future disaster does the backtracking to find the harm end? Computers and the internet are routinely blamed for all kinds of wrongdoing, whereas the clothing, roads, vehicles and other pre-digital artifacts surrounding something bad routinely are not.

What matters is the culture of use around the technology, whether there is a culture of reasonable use or just a culture of unreasonable use. And you simply cannot infer the culture from the technology. Culture does not grow from the technology. It just does not work that way.

I think a lot of the internet disbelief wrt. to The Pirate Bay verdicts comes from basically missing this point. "But then Google is infringing as well" floats around. But the important thing here is that Pirate Bay is largely a culture of sharing illegally copied content whereas Google is largely a culture of finding information.

I think it's important to keep culture in mind - because that in turn sets technology free to grow. We can't blame technology for any potential future harm; we'll just have to not do harm with it in the future - but the flip side of course is that responsibility remains with us.

I haven't read the verdict, but the post verdict press conference focused squarely on organization, behaviour and economics of what actual crossed the Pirate Bay search engine, which seems sound.

- that being said, copyright owners are still squandering the digital opportunity by not coming up with new ways of distribution better suited for the digital world, but the internet response wrt. The Pirate Bay that they just couldn't be quilty, for technological reasons, does not really seem solid to me, if we are to reason in a healthy manner about technology and society at all.

The What You Want-Web got a number of power boosts this week.

- iTunes, the online music hegemony, is now fully DRM-free. Differentiated pricing so far means mostly a price bump to $1.29 (says reports), but have you seen App Store prices lately? iTunes is now thoroughly lock-in free.

- Spotify, the most disruptive challenge to the above hegemony, launched an API, the only plausible response to the open source despotify library that surfaced a little while ago. It is Linux only so far, but that has to be a temporary condition. Spotify still has DRM and probably needs a different level of bargaining power to make the argument that it does not matter anymore.

- Google App Engine announced Java support, and not just Java, but full JVM support. This essentially announces Javascript, Groovy, Scala support as well (one would expect Lift soon), and - as important as more languages - better DB interop. Specific tools for importing and exporting data from the App Engine.

The What-You-Want Web is my just-coined phrase for the lock-in free, non-value-bundled, disintermediated, higly competitive computation, api, and experience fabric one could hope the web is evolving towards. Twitter already lives there, nice to see some more people join.

The important thing about all of these announcements is that they forgo a number of options for making money off free/cheap: Lowering the friction towards zero means the services have to succeed on their own merits. If they fail to offer what I need or want, I can just leave. I don't have to buy into the platform promise of any of these tools, I can just get the stuff that has value to me.

I think in 5 years we will remember Twitter largely as the first radically open company on the web. Considering the high availability search and good APIs, there literally is no aspect of your life on Twitter that you can't take with you.

P.S. (Also, three cheers for Polarrose, launching flickr/facebook-face recognition today. A company adding decisive value with unique technology, born to take advantage of the WYW-Web.)

Pretty good overview of what's wrong with URL shorteners. They destroy the link space, adds brittle infrastructure run by who knows who. We already know that the real value proposition is traffic measurement - i.e. selling your privacy short.

The problem of course is the obvious utility of shorteners.

This is all new stuff, the current state of the art is not how it is going to end.

I går var jeg i Århus, primært for at se Enter Action på Aros, men også for at hilse på bekendte og besøge NEXT expoen*.

Enter Action er afgjort et besøg værd, endda en rejse værd. Udstillingen er godt nok lidt ujævn, efter min mening, og bærer stadigvæk vidnesbyrd om hvor svært teknologi som materiale er, det kniber stadig en smule med at komme forbi materialet til et udtryk, selv i sådan et creme de la creme setup som her. Nu er teknologi jo i mellemtiden blevet så almindeligt omkring os, så det fint går ind som selve materien i udstillingen også, og det hjælper bestemt lidt på det. Flere af værkerne gør en dyd ud af den synlige teknik.

I enestående grad bedst på udstillingen er Listening Post (video af værket her), der med lyd, mørke og en monumental opsætning af digital tekst hentet fra nettet, sagtens kunne bruges til en hel dags besøg.

Måske har det lidt med hele det her med at rejse efter kunsten også at gøre. Listening Post, i modsætning til de fleste andre værker på udstillingen, har et kultisk monumentalt præg, der fungerer godt som mål for rejsen. Cærket er naturligvis allerede gennempræmieret.

Noget, der så ud som om det kunne være fantastisk, de robotiserede rullestole, fungerede ikke rigtig mens jeg var hos dem, til gengæld var jeg glad for at en virkelig energisk teenager med god balance, gav en flot demo af det utrolige visuelle løbebånd. Jeg havde ikke selv holdt i 30 sekunder.

Mest irriterende på udstillingen: Et kedeligt rum med net-art i browsere. Blah. Aros skal naturligvis linke til det på hjemmesiden, men det er altså ikke noget der funker på stedet. (og dog, jeg må ikke glemme at der er et so last year Second Life rum også).

Mest overraskende på udstillingen: Mit hjerte slår, hvis Pulse Room står til troende, i valsetakt.

Bonus Aros-anbefaling: Gå ikke glip af Lars Arrhenius' skeletvideo et par etager højere oppe. Der er en Tim Burtonsk grum humor over den skeletale hverdag i Stockholm.

NEXT udstillingen var udemærket, men ikke en tur værd i sig selv. Ikke helt så cutting edge som tidligere, men betydeligt mere bred - materialevidenskab og andre ingeniøragtige indslag var også med. Det var dejligt at se The Orb, Wiremap og Cellphone Disco i virkeligheden, men som den sætning også antyder, så er det ikke let at være NEXT i blog-monokulturen. Mange af de objekter, udstillingen kan finde frem, har vi alle set på nettet.

Og så lige en pet peeve: Hvorfor står der en Z-machine printer på udstillingen hvert år, når den ikke kører og printer udstillingsgæsternes egne 3D-kreationer?

Og hvorfor var der ikke nogen Siftables?

* Konference skuffede mig totalt med sin mangel på servietorigami til lunchcam'et, men ellers var Matt Webbs indlæg (og det med naturen) som jeg så på videostrøm, virkelig godt - sorry Nikolaj, men jeg nåede ikke at se din preso.

Kunstig Intelligens er som regel noget med elegante søgealgoritmer. Man har en masse data, og vil gerne vide noget om dem, og så bruger man en af en række af elegante søgealgoritmer; der er forskellige snit - brute force, optimale gæt, tilfældige gæt. Inde i kernen af sådan en algoritme ligger der en test, der viser om man har fundet det man ledte efter.

Det er jo enkelt nok at forstå, når man ikke bare kigger på det magiske resultat. Mere punket bliver det, når testen der er kernen i algoritmen, udføres af en laboratorierobot. Altså af en rigtig fysiske maskine, der arbejder med rigtig fysiske biologiske systemer i laboratoriet.

Sådanne maskiner findes faktisk, ihvertfald en af dem. Og den har lige haft et gennembrud og isoleret et sæt gener, der kodede for et enzym, man ikke kendte den genetiske kilde til.

Wiredartiklen har mange flere detaljer, hvad der gør det ekstra trist at vide at Wired Online lige er blevet skåret drastisk ned af en sparekniv.

Jeg sidder og læser transcriptet af idésessionen til Raiders of The Lost Ark. Steven Spielberg og George Lucas briefer Lawrence Kasdan om historie og personer, som Lucas i forvejen har udarbejdet sammen med Philip Kaufman.

Det slående ved at læse historien er, at ingen af deltagerne interesserer sig det mindste for andet end maximal spænding og underholdning på en realistisk måde. Der er ikke så meget opmærksomhed på andet end at det skal blive en fantastisk oplevelse. Og billedet af underholdning er helt igennem bygget på hvordan man så selv modtager historien. Der er meget få indre mål, meget lidt obstruktion hvad angår hvordan det skal gennemføres. Det handler kun om at få underholdningen til at lykkes.

De problemer der er til diskussion er bare "Kan man forstå det?" "Bliver det kedeligt?".

For ikke så lang tid siden snakkede jeg med et par dygtige konsulenter (Kim og Ebbe herfra) om deres tidligere arbejde i spilindustrien. Vi snakkede om optimering, og hvordan de af og til havde ting, der skulle laves som tog nogle millisekunder - men stadig alt for lang tid. Spillet skal jo holde en høj framerate, for at oplevelsen er der, og så er millisekunder pludselig dyre.

- Der er noget sundt ved at have oplevelsen som sin eneste succes. Det eliminerer en masse undskyldninger om hvad man skal bede folk om for at nå sine egne mål, eller deres mål. "Nyttigt" eller "vigtigt" giver stadig plads til masser af kompromiser i oplevelsen. Når oplevelsen er det hele, så er der ikke andet end brugerens fornøjelse med det man har lavet. Det er sundt på to måder: Dels eliminerer det dårlige undskyldninger. Dels så kommer man helt udover kanten. Fra bare "nyttigt" til "fornøjelse" er der et langt og vigtigt skridt.

The Chrome Experiments seem like an odd departure for Google. All Google properties seem to focus on fundamentals, not experience, but here you have a group of experiments that are pretty much exclusively about experience. Also, what happened to white?

Of course the experiences highlight that fundamentals make a difference: Casey Reas' blog post seems very on the mark to me.

Chrome and its fast JavaScript capability offers a glimpse of a Web without proprietary plug-ins.

[...]

Technically, I think the greatest innovation of Chrome is launching each Window or Tab as a separate process. If you try to run Twitch on Firefox it starts to slow down as more windows open. Each mini-game competes for the same resources from the computer's processor. In Chrome, because each window runs separately, the frame rate remains high.

Don't miss out on Sascha Pohflepp & Karsten Schmidt's socialcollider (which, sadly, sucks performance wise in Firefox)

All of this goes to show that technology is never done. There's always more to do, something different to do. Search is not done either. Where the money goes is not done.

ARToolkit has been around for ages (10 years), but until quite recently was sadly locked up in C and C++ libraries. Recently it has been completely liberated and implemented in Flash, Processing, Java, C# and now runs on any toaster. The power of that is just huge. The usual first instinct with ARtoolkit is "Oh look at this virtual stuff on top of my real stuff. Then you have to suppress a yawn. But now you have all of these platforms and people with a completely new perspective start using ARToolkit in completely different ways.

The T-shirts are nice, but actually ARToolkit is not a good match, since it's not really a 2d code, but pretrained recognition (read the whole thing, Claus - they added 2d barcodes on top of the FLAR-pattern).

The calibrate and forget on a fixed surface works well.

The one I really think is brilliant is using non-recognition as the signal, with recognition as the steady state.

The ARToolkit code really benefits from coming out into the fresh air of Processing and Flash. New people with fresh energy to experiment and different ideas about what its for.

If you are doing print on demand, this fingerprinting technique lets you fingerprint the actual paper you're printing on. The ultimate document DRM.

Industriel produktion er ved at blive social - jo mere social produktion bliver, jo flere sociale værktøjer får vi til at producere med.

Monome er en populær minimalistisk digital kontroldims til musikere og andre kreative mennesker. Eller, populær er måske så meget sagt, for den er kun lavet i et par meget små oplag - at producere storvolumen elektronik er dyrt og risikabelt. Til gengæld er det den eneste måde at lave elektronik billigt på, så man er lidt fanget i en "sjældent eller risikabelt" fælde. Man kan enten tabe penge, eller arbejde meget dyrere.